TS Lombard: Can Fed cuts reflate the AI bubble? (10/03/2024)

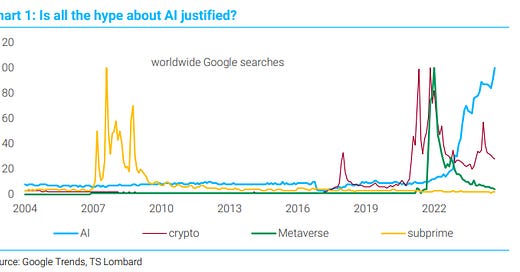

AI has the potential to boost productivity and GDP, although it is questionable whether it will match the boost from the Dotcom era, let alone “upend society” and deliver a “new machine age” or a “Third Industrial Revolution”. But if the Fed can repeat Alan Greenspan’s soft landing, who’s to say it won’t also recreate the “irrational exuberance” of the Greenspan era?

PICKS AND SHOVELS

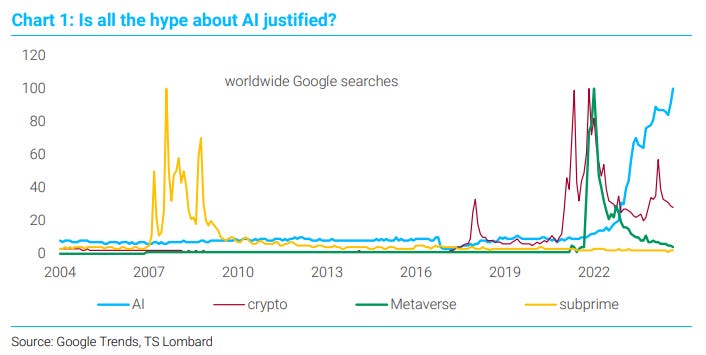

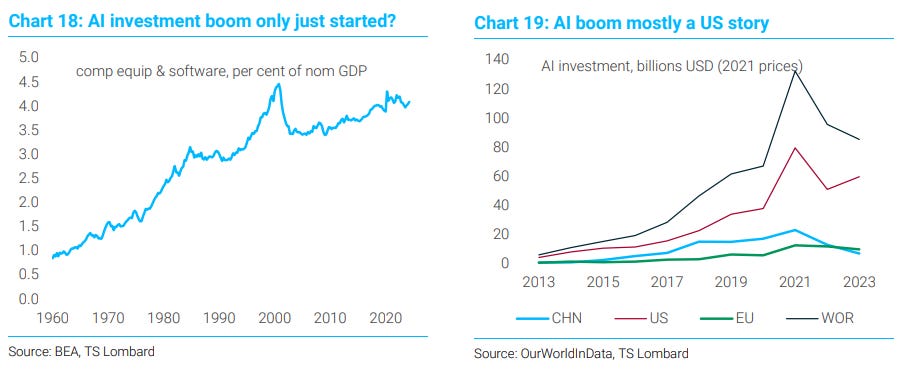

The AI theme has been a dominant driver of US stock-market performance ever since ChatGPT’s public release in late 2022. While the “picks and shovels” narrative (which has benefitted the likes of NVIDIA) probably has further to run, the big question now is whether we will start to see the broader benefits from AI technologies – particularly as a source of productivity and GDP growth.

BEYOND THE FOMO

We now have clear evidence that AI can boost worker efficiency in doing specific tasks, such as journalism, coding and customer services. But there is huge uncertainty about its potential to boost aggregate productivity, let alone transform the global economy. We should treat the most bullish forecasts – from management consultants, etc. – with a healthy dose of scepticism.

IRRATIONAL EXUBERANCE 2.0

Rationally, the prospect of Fed rate cuts and a soft landing should disproportionately benefit those stocks that are most exposed to economic weakness and tight policy (real estate, small cap, value stocks). We might see a rotation out of US tech. But if the mid-1990s is the policy template, there must be a decent chance that any AI-inspired “bubble” has further to inflate.

CAN FED CUTS REFLATE THE AI BUBBLE?

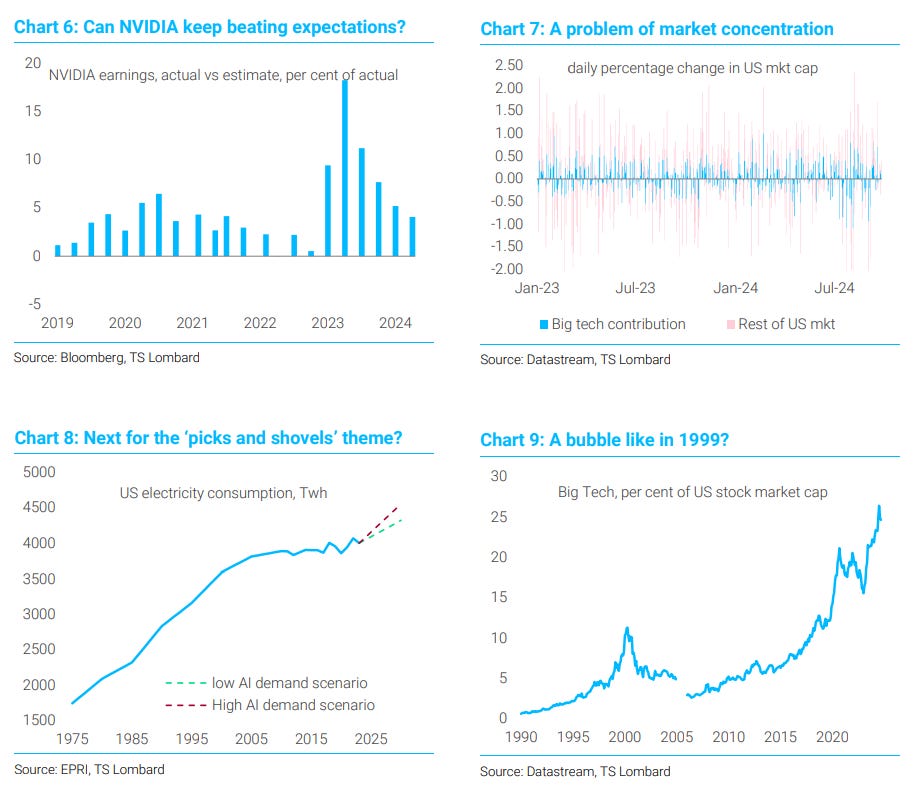

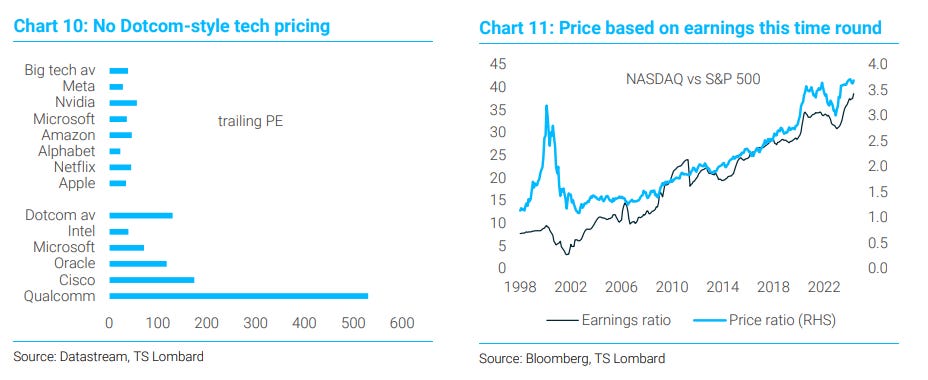

During the summer, we noticed increasing scepticism among investors about the US tech sector and the potential for AI technologies to transform the economy. Nothing sets a market narrative like the latest price action, and the likes of NVIDIA had clearly lost momentum, which, given their outsized weight in US equity indices, had become a problem for the broader stock market. Of course, it was important to keep those market moves in perspective. With the NASDAQ up more than 60% since the launch of ChatGPT in late 2022, a two-month period of “consolidation” (aka treading water) is not exactly unusual, let alone alarming. And it was somewhat reassuring that the companies that had benefitted most from the AI theme were also those companies that had seen a very direct revenue boost from Big Tech investments in this technology, which is why valuations had stayed far below those seen in the Dotcom era. The likes of NVIDIA were the “picks and shovels” of the AI revolution rather than a speculative punt on who would “discover gold”. While it is becoming increasingly hard for NVIDIA and co. to keep beating market expectations, the real question now is about the underlying technologies and whether AI can deliver the sort of efficiency gains that warrant such large-scale investments in the first place.

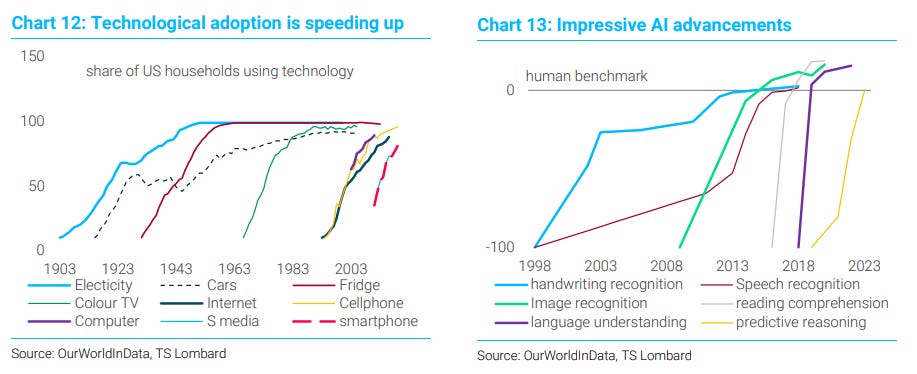

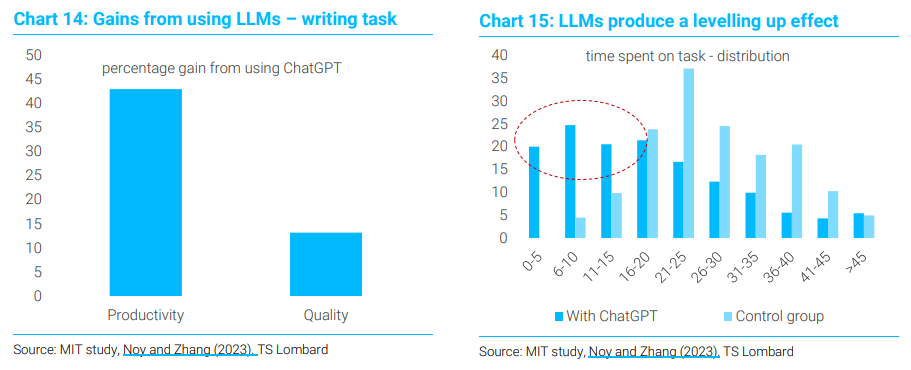

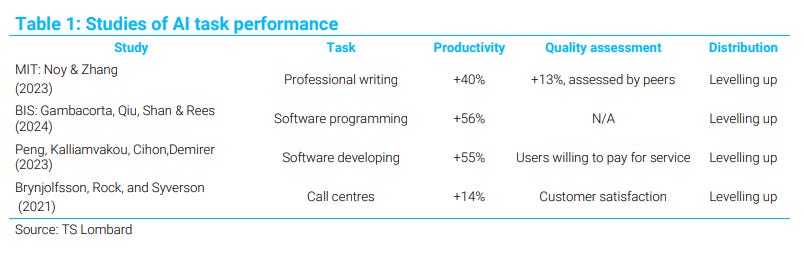

Macroeconomists have only recently started to take AI seriously, with a flurry of research activity over the past 18 months. Much of the focus has been on the ability of these technologies – especially Large Language Models (LLMs) – to generate efficiency gains in specific human tasks, such as computer programming, writing and customer services. So far, the evidence is encouraging, with AI delivering significant time savings – in the range of 15-60% – and major improvements in quality. Yet to translate these efficiency gains into a broader productivity boost, we need to take account of the proportion of tasks that are exposed to AI as well as the scope of these technologies to boost economy-wide innovation and scientific discovery. And these effects are hugely uncertain, which is why we are seeing big disagreements about what AI means for real GDP growth over the next decade. On one side of the debate, there are the management consultants such as Accenture and PwC, which say these technologies will totally transform the economy. On the other side, we have the gritty realism of the academic economists, who believe the impact of AI will be negligible. Right now, the spread between these two schools of thought is 20% pts of global GDP by 2035, which is laughably wide – even for economists.

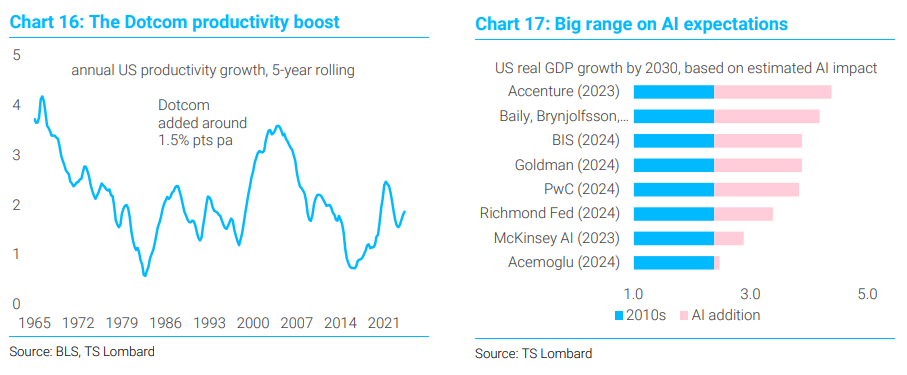

We side with the sceptics. While we are confident that AI will eventually boost productivity (particularly in the US), the impact could well fall short of the efficiency gains associated with Dotcom (i.e., an extra 2% pts of growth per annum), let alone match the more outlandish claims of industry insiders. LLMs continue to struggle with major weaknesses; and – contrary to what enthusiasts say – there is no guarantee that future iterations of the tech will overcome these problems or that the industry will continue along the “exponential curve” that is supposed to lead to Artificial General Intelligence (AGI). (This is not necessarily a bad thing, especially for labour markets, because it means the technology will be skill-augmenting rather than labour-replacing.) But our scepticism about the fundamentals of AI does not mean we are particularly bearish on US tech stocks or that we are forecasting a major correction in NVIDIA and co. We suspect the two-year mania in AI-related equities has further to run, especially now that the Fed has signalled its “commitment not to fall behind the curve”. While, in theory, a successful Fed intervention should disproportionately benefit other areas of the market (driving a rotation), the lesson from the 1990s is that soft landings can be fertile ground for “irrational exuberance”. Of course, there is one important difference between today and the New Economy of the Greenspan era – a less favourable geopolitical environment (with events in the Middle East providing a timely reminder).

1. PICKS AND SHOVELS

Two years ago, when the Federal Reserve started to raise interest rates, it looked like tighter monetary policy would fundamentally change the structure of stock markets. “Long duration” tech stocks would lose, while those sectors that were exposed to a stronger, high-pressure economy (“value” stocks, industrials etc.) would outperform. And for a while this “rotation” was playing out. But that all changed with the launch of ChatGPT at the end of 2022. AI not only captured the imagination of the public; it revived the bullish narrative in US tech. Suddenly, investors were buying these stocks on the basis that they were “recession-proof” and had limited exposure to rising interest rates (because Big Tech had lots of free cash and only modest levels of debt – unlike the average small-cap stock). During the summer, however, we noticed that investor sentiment had flipped again. Scepticism about AI (and US Big Tech in general) had returned; and amid rising confidence in the soft landing, the “rotation” theme was suddenly back in vogue. Now we stand at a potentially important juncture. Was the AI theme just another tech bubble that is about to burst? Should we expect those stocks to underperform as the Fed cuts interest rates? Or could a 90s-style soft landing also deliver a 90s-style tech “melt-up”?

NVIDIA and beyond

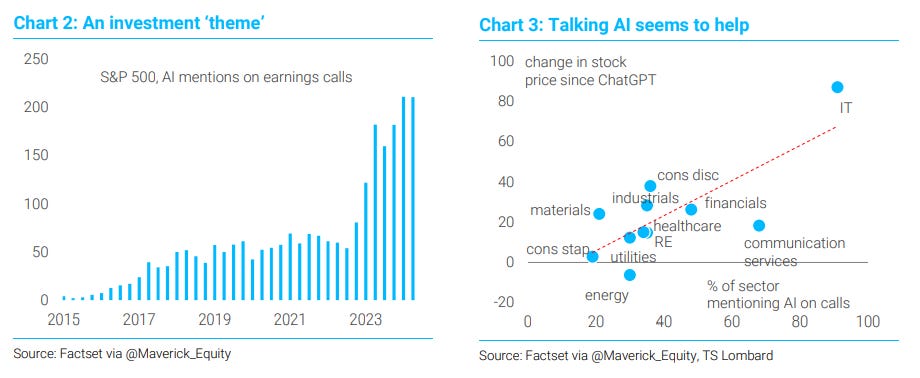

There has been a lot of hype around AI since the launch of ChatGPT, as we can see from the number of times this technology has been mentioned on analyst earnings calls. AI chatter spread across all sectors of the US market, even those not immediately associated with digital automation. But for the most part, it is the companies supplying the “picks and shovels” for the AI revolution that have performed best. (“Picks and shovels” is a popular phrase in finance – a reference to the mid-19th century Californian gold rush. You didn’t know who would find gold, but you wanted to be the person supplying the equipment needed to search for it.) And the biggest beneficiary, of course, has been NVIDIA. For decades, NVIDIA has specialized in making the chips and graphics processing units (GPUs) used in computer games. But a couple of years ago, its hardware suddenly found much wider use: crypto mining, self-driving cars and, most importantly, the training of AI models. With Big Tech engaged in an AI arms race since the launch of ChatGPT, NVIDIA’s earnings have surged – rising from US$12 billion in 2019 to US$120 billion today.

Kalecki and NVIDIA

There is no mystery behind NVIDIA’s spectacular success. In economics, there is a famous (often misused) accounting identity – the Kalecki–Levy profit equation – which shows that corporate profits must always grow in line with business investment (at least when you focus on the private sector and ignore the trade position). The intuition is simple. When one company invests more, it boosts the earnings of other companies (its suppliers) without generating an offsetting cost (because investment is not treated as an operating cost). From a profitability point of view, this makes an increase in capex quite different from an increase in household spending. When consumption increases, revenues rise, too; but because consumers tend to spend out of their wages (which are a cost for the corporate sector), the impact on profits is more ambiguous. When we think about the NVIDIA story from the Kalecki–Levy perspective, the company’s earnings are just an aggregation of its customers’ massive capital expenditures. And NVIDIA’s customer base is heavily concentrated. Microsoft accounts for 20% of NVIDIA’s sales, while Meta Platforms, Alphabet and Amazon account for 10%, 7% and 6%, respectively, bringing the total to roughly 42%. In short, NVIDIA has been a direct beneficiary of Big Tech AI “FOMO” (Fear Of Missing Out – the desire not to fall behind in this new technological arms race).
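For reference, one common textbook statement of Kalecki’s profit equation is sketched below. The notation is ours, and the simplification that follows mirrors the assumptions stated above (private sector only, trade ignored); it illustrates the accounting logic rather than modelling NVIDIA’s earnings.

$$
\Pi = I + C_{\pi} - S_{w} + (G - T) + (X - M)
$$

Here $\Pi$ is after-tax profits, $I$ is gross private investment, $C_{\pi}$ is consumption out of profits, $S_{w}$ is saving out of wages, $G - T$ is the government deficit and $X - M$ is the trade surplus. Setting $G - T = 0$ and $X - M = 0$, and assuming workers spend all of their wages ($S_{w} = 0$), gives

$$
\Delta \Pi = \Delta I + \Delta C_{\pi}
$$

On these assumptions, an extra dollar of capex raises aggregate profits by a dollar, whereas extra consumption funded by higher wages leaves profits unchanged, because the additional revenue is matched by the additional wage bill.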

Is NVIDIA a bubble?

If there is a tech bubble concentrated in NVIDIA, it looks quite different from the Dotcom bubble of the 1990s. NVIDIA’s price is backed by its incredible earnings growth, unlike many of the companies that saw their valuations explode during the Dotcom era. (With a trailing PE of under 60, there is no comparison between NVIDIA and the likes of Cisco or Qualcomm in the late 90s, which traded at PEs of 530 and 175, respectively.) That does not mean, however, that the company is not vulnerable to a major correction. Although NVIDIA’s Big Tech customers are planning continued strong AI capex in the coming years, there is no guarantee that Jensen Huang’s outfit will continue to capture the lion’s share of that market. Perhaps new competitors with superior GPUs will emerge or the likes of Microsoft and Meta will find a way to create their own hardware. We shouldn’t forget that the whole semiconductor industry is infamously cyclical, and it is possible that Big Tech FOMO has already brought forward a lot of future demand. But even if the demand for NVIDIA’s product remains strong, it is going to be increasingly hard for the company to keep beating analysts’ expectations by such a wide margin (Chart 6). Remember, it is not just earnings that drive stock prices; it is earnings relative to (increasingly) bullish sentiment.

Market exposure to AI

Thanks to NVIDIA’s spectacular growth – and the AI theme more generally – the US stock market has become increasingly sensitive to a small number of Big Tech names. There are times when this concentration has become an obvious vulnerability – as we saw during the summer, when the yen carry trade began to unwind, triggering a big correction in US tech stocks and a nasty risk-off episode in global markets. Realistically, we do not know if this was just the first taste of a much larger correction, or whether NVIDIA and co. will quickly bounce back to their previous highs (and perhaps beyond). It is possible that even the “picks and shovels” theme has further to run and will spread to other sectors of the market such as energy; if big investment in AI continues, this trend will put enormous pressure on the US power grid. (Analysis from the Electric Power Research Institute shows US electricity demand could surge over the next decade, the first material increase since the 1990s.) But we can look at the underlying macroeconomics of AI and provide an assessment of whether this technology can really prove as transformational as its enthusiasts claim. If the tech underlying the AI thesis – particularly LLMs like ChatGPT – cannot live up to investor expectations, then it will be increasingly hard for the Big Tech companies to make money from it. There has to be an underlying “use case”. And if the gold isn’t there to be found, eventually people will stop buying the picks and shovels.

2. BEYOND THE FOMO

Eighteen months ago, we published a somewhat sceptical take on the transformative power of LLMs. We argued that industry insiders, such as OpenAI CEO Sam Altman, were wildly exaggerating both the ability of LLMs to “deliver vast new wealth” and the risk that AI was about to “upend society” and deliver mass unemployment. Altman and his peers were not only talking their own book; their claims bordered on mysticism when it came to the ability of these machines to replace human thinking. Since then, of course, we have learned a lot more about this technology, both in terms of its current capabilities and, looking ahead, whether we are on the “exponential path” that was promised. There has been a bull market in AI macro research, with a flurry of papers attempting to calibrate its impact on future economic growth and inflation. With these developments in mind, it is useful to provide an update. And to cut to the conclusion: we haven’t seen anything to shake our scepticism. While we are confident AI will boost productivity, it will struggle to match the gains of the Dotcom era, let alone justify the rhetoric of tech insiders.

A macro framework

Let’s start with a quick reminder of how generative AI works, particularly the latest vintage based on LLMs. In very crude terms, this is a system that has read a lot of stuff on the Internet and is predicting the next word in a sequence. When you ask ChatGPT a question, it converts each word (or fragment of a word) into a number and then tries to predict the next number in the sequence, largely based on the associations between these numbers that it “learned” during training, when it was run billions of times over a massive dataset (a vast snapshot of the Internet, albeit with quality controls). In one sense, these models are simply trying to autocomplete our sentences, based on things humans have written down previously; but they do this in an extremely sophisticated way, using artificial neural networks loosely inspired by the human brain. While ChatGPT produces words, there are other generative AI models that can create digital photos, drawings and animations. With any new technology, the big hope is always that it will transform productivity – the key to unlocking growth and prosperity.
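To make the “autocomplete” intuition concrete, here is a deliberately crude sketch: a bigram counter that predicts the next word purely from how often one word followed another in its training text. This is a swapped-in toy technique, not how ChatGPT works (real LLMs use learned numerical representations and large neural networks rather than raw word counts), and the corpus and function names are hypothetical.

```python
from collections import Counter, defaultdict


def train_bigram(corpus: str) -> dict[str, Counter]:
    """Count, for each word, which words followed it in the training text."""
    words = corpus.lower().split()
    follows: dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def autocomplete(model: dict[str, Counter], prompt: str, n_words: int = 3) -> str:
    """Greedily append the most frequent next word, one word at a time."""
    words = prompt.lower().split()
    for _ in range(n_words):
        candidates = model.get(words[-1])
        if not candidates:
            break  # word never seen in training, so there is nothing to predict
        words.append(candidates.most_common(1)[0][0])
    return " ".join(words)


# Hypothetical toy corpus standing in for "a lot of stuff on the Internet"
corpus = ("the fed cuts rates . the fed cuts rates again . "
          "the market rallies when the fed cuts rates .")
model = train_bigram(corpus)
print(autocomplete(model, "the", 3))  # prints "the fed cuts rates"
```

The model can only echo associations present in its training text, which is the (vastly simplified) sense in which these systems autocomplete from what humans have already written.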

As Daron Acemoglu (2024) points out, there are several channels through which AI might (theoretically) unlock important efficiency gains:

(i) Simple automation – this would involve AI models taking over specific tasks and delivering better quality output faster and at a much lower cost. In the case of generative AI, specific examples include various mid-level clerical functions, text summary, data classification, advanced pattern recognition and computer vision.

(ii) Task complementarity – AI can increase the productivity of tasks that are not fully automated and may even increase the demand for labour. For example, workers performing certain tasks could have better information or superior access to other complementary inputs. Alternatively, AI may automate some subtasks, while at the same time enabling workers to specialize and raise their productivity in other areas.

(iii) Deepening of automation – AI may increase the productivity of capital in tasks that have already been automated. For example, an already-automated IT security task may be performed more successfully by generative AI.

(iv) Completely new tasks – AI could create totally new investment opportunities and products, which would increase the productivity of the entire production process. LLMs might expedite the R&D process, accelerate innovation and even speed up the pace of scientific discovery, expanding the efficient frontier of the economy.

AI and task efficiency

That’s the theory, but what does the evidence say? Nearly two years after the launch of ChatGPT, we have compelling evidence that AI can increase the efficiency of doing specific tasks (which is related to the productivity potential of channels (i) and (ii) in the list above). When it comes to areas like computer coding, writing assignments and even customer services, LLMs have been found to deliver a material efficiency boost in the range of 15-60% (Table 1). People using the AI found they were able to complete these tasks in less time and to a higher standard. Interestingly, most studies also recorded a levelling-up effect – that is, it was the LLM users with the lowest levels of competence who saw the biggest efficiency gains. We particularly like the MIT study that asked 444 college-educated professionals to perform two simple (occupation-relevant) writing tasks, allowing half of the group to use ChatGPT on the second task (with the other half as a control group). Their results showed a large improvement in productivity, with the average time spent on the second task dropping by 40%. And the MIT researchers also measured the quality of the output, using (blinded) evaluations from experienced professionals in the same occupation. The quality of the writing improved by 15%, with LLM users also reporting higher levels of job satisfaction and an increased sense of self-worth. Just a few months ago, the BIS recorded very similar results, this time for computer programmers completing coding tasks.

What about the more controversial claims that AI will accelerate the pace of scientific discovery? Here the evidence is sketchy, at best. AI optimists point to certain areas of biological research, where there have been some encouraging breakthroughs. DeepMind’s AlphaFold, for example, was able to predict the 3D structure of every known protein, a task that was predicted to take decades of human endeavour. But that was in 2021, and we haven’t exactly seen a burst of scientific breakthroughs since then. In fact, just last month, a paper by Zachary Siegel and others found that AI struggled to replicate existing scientific research, even when using the same code and data sources, which means it is still a long way from “expanding the frontier of human knowledge”. Based on what we know so far, the idea that AI will transform productivity by speeding up the pace of scientific discovery is largely conjecture rather than fact.

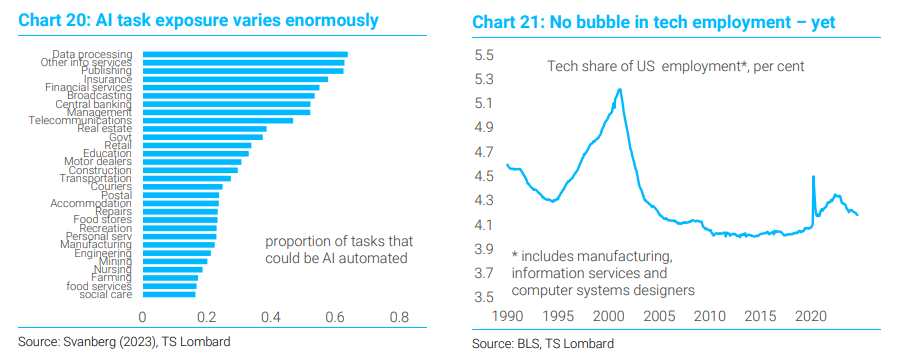

Aggregating the efficiency boost

While we can be confident that AI will boost the efficiency of doing specific tasks, even these gains are less than they seem when we translate them into a whole-economy impact. Glancing at Table 1, which shows efficiency gains of 15-60%, the casual observer might conclude that this is pretty impressive. But to estimate the effects on the whole economy, we have to take account of (i) the proportion of tasks that are exposed to AI automation and (ii) the share of those automatable tasks where it is actually profitable to carry out the automation. So far, Daron Acemoglu from MIT has provided the most comprehensive analysis of this question. His estimates suggest 20% of the tasks in the US economy will be exposed to automation over the next decade (based on separate analysis from Svanberg et al. (2023) – Chart 20), but that automation is profitable only for 23% of those exposed tasks. If we assume an average efficiency gain of 27%, that means a whole-economy productivity boost of just over 1% over the next decade (27 multiplied by 0.23 multiplied by 0.2). That’s an extra 0.1% pts on US productivity growth per annum, a negligible improvement even by post-GFC standards.
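The aggregation above can be reproduced in a few lines. This is a minimal sketch using only the three inputs quoted in the text; the function names are our own, and converting the ten-year level effect into a per-annum figure by compound growth is our assumption about how the annual number is derived, not a reproduction of Acemoglu’s full model.

```python
# Inputs as quoted in the text (our labels)
exposed_share = 0.20         # share of US tasks exposed to AI over the next decade
profitable_share = 0.23      # share of exposed tasks where automation is profitable
task_efficiency_gain = 0.27  # assumed average efficiency gain on automated tasks
years = 10


def whole_economy_boost(exposed: float, profitable: float, gain: float) -> float:
    """Cumulative productivity boost = exposed share x profitable share x task-level gain."""
    return exposed * profitable * gain


def annualised(cumulative: float, horizon: int) -> float:
    """Convert a cumulative level effect into a compound annual growth increment."""
    return (1 + cumulative) ** (1 / horizon) - 1


total = whole_economy_boost(exposed_share, profitable_share, task_efficiency_gain)
per_year = annualised(total, years)
print(f"Cumulative boost over {years} years: {total:.2%}")   # 1.24%
print(f"Extra productivity growth per year: {per_year:.2%}")  # 0.12%
```

Only 4.6% of tasks (20% × 23%) end up automated, which is why a 27% task-level gain shrinks to roughly 0.1% pts a year at the aggregate level.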

Range of outcomes

If AI lifts productivity, it should also boost investment. So, the total impact on US GDP should be a little larger than Acemoglu’s headline estimates. But even allowing for stronger capex, these calculations fall a long way short of all the recent hype from the tech industry. It is possible, of course, that Acemoglu is being too pessimistic. If we change any of his assumptions – such as the share of tasks that are exposed to AI or the speed over which the automation will happen – we end up with much punchier estimates. And over the past two years, there have been plenty of other economists – particularly those who work in the management consultancy industry – who have done exactly that, producing estimates that are often 10 times higher than Acemoglu’s (published to much fanfare in glossy publications and with fancy graphics). Chart 17 shows this has delivered an enormous range of potential outcomes, which only underscores the uncertainties associated with AI automation. But even if we take an average of the estimates in Table 2, which overweights the management consultants, we are still looking at an impact that would fall short of the productivity gains we saw in the late 1990s with the Dotcom boom. And in view of the actual evidence available so far, we should lean towards the conservative findings.
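Because the headline estimate is the product of several inputs, modest changes to each assumption compound quickly. The sketch below holds the 27% task-level gain fixed and varies the exposed and profitable shares; the alternative values (40% and 50%) are hypothetical, chosen purely for illustration, and are not taken from any of the studies in Table 2.

```python
from itertools import product


def whole_economy_boost(exposed: float, profitable: float, gain: float) -> float:
    """Cumulative boost: exposed share x profitable share x task-level efficiency gain."""
    return exposed * profitable * gain


GAIN = 0.27  # average task-level efficiency gain, as quoted in the text
baseline = whole_economy_boost(0.20, 0.23, GAIN)

# Hypothetical alternative assumptions, for illustration only
scenarios = {}
for exposed, profitable in product([0.20, 0.40], [0.23, 0.50]):
    boost = whole_economy_boost(exposed, profitable, GAIN)
    scenarios[(exposed, profitable)] = boost
    print(f"exposed={exposed:.0%}, profitable={profitable:.0%}: "
          f"{boost:.2%} over ten years ({boost / baseline:.1f}x baseline)")
```

Roughly doubling two inputs more than quadruples the result, which helps explain how plausible-sounding tweaks can produce estimates many times larger than Acemoglu’s.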

AI limitations are bullish!

To really buy into the AI hype, you have to believe that these technologies will continue to improve at a rapid pace (Sam Altman’s “Moore’s law for everything”), and that LLMs have put us on an exponential path to AGI – fully self-aware machines that can perform any intellectual task that a human can perform. But as we explained in our previous publication, this is a very strong assumption. Anyone who has used these models knows they have major limitations, particularly in terms of their reliability and trustworthiness. The models hallucinate and there is no evidence that such issues will disappear just by “scaling” them (i.e., training them on more and more data). Almost two years after the launch of ChatGPT, these problems are still very much evident. In fact, Gary Marcus – whose work we highlighted in our previous Macro Picture – believes we have already reached the point of diminishing returns. Marcus jokes “we are still in the same place as two years ago, only with much better graphics”. Ironically, from a broader societal point of view, these inherent flaws in LLMs could turn out to be a “good” thing. If AI continues to have reliability problems, it is always going to need a degree of human oversight. And in that scenario, we are talking about a technology that is likely to be “skill-augmenting” rather than labour-displacing. Put another way, AI will help humans become more efficient in their jobs, but it is not going to cause mass unemployment or societal breakdown. (Which means it will be like every previous technological breakthrough – surprise, surprise!)